Decision Trees

Decision Trees are among the most widely used machine learning algorithms, largely because they are simple, interpretable, and versatile. They apply to both classification and regression tasks, and they break a complex decision down into a sequence of simple questions about the input features.

Decision trees are flowchart-like models used to make decisions or predict outcomes by splitting data based on conditions at each branch.

1. What is a Decision Tree?

Definition and Basic Concept

A Decision Tree is a supervised learning algorithm that splits data into branches based on decision rules, resembling a tree structure. It’s commonly used for both classification (predicting categories) and regression (predicting continuous values). The model consists of a root node (the starting point), decision nodes (where data splits occur), and leaf nodes (where final outcomes or predictions are reached).

Real-World Analogy

Imagine you’re deciding whether to bring an umbrella based on the weather. At the top of your decision-making process is a root question: “Is it raining?” Based on the answer, you either decide to bring an umbrella or not. Similarly, Decision Trees follow a chain of logical steps based on input data features to reach a final prediction.

2. Core Components of Decision Trees

Nodes

- Root Node: The starting point, containing the entire dataset.

- Decision Nodes: Points where data is split based on specific features or rules.

- Leaf Nodes: The endpoints of the tree, representing final predictions or classes.

Branches

Branches represent possible choices or outcomes of each decision point, connecting the nodes.
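
To make these components concrete, here is a minimal sketch of how nodes and branches might be represented in code. The `Node` class, its field names, and the `rain_mm` feature are hypothetical choices for illustration, not a standard layout.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of a binary decision tree (illustrative layout)."""
    feature: Optional[str] = None      # feature tested at a decision node
    threshold: Optional[float] = None  # take the left branch if value <= threshold
    left: Optional["Node"] = None      # branch followed when the test is true
    right: Optional["Node"] = None     # branch followed when the test is false
    prediction: Optional[str] = None   # set only on leaf nodes

    def is_leaf(self) -> bool:
        return self.prediction is not None


def predict(node: Node, sample: dict) -> str:
    """Follow branches from the root until a leaf node is reached."""
    while not node.is_leaf():
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.prediction


# The root node tests rainfall; both of its children are leaf nodes.
root = Node(
    feature="rain_mm",
    threshold=0.0,
    left=Node(prediction="no umbrella"),
    right=Node(prediction="umbrella"),
)

print(predict(root, {"rain_mm": 2.5}))  # umbrella
```

Prediction is just a walk from the root along one branch per decision node, which is why each prediction can be traced back to specific feature tests.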

Splitting

Splitting divides the data at a node into subsets based on feature values. Each split is chosen to minimize an impurity measure, such as Gini Impurity or Entropy, so that the resulting subsets are more homogeneous than the parent node.

Pruning

Pruning is the technique of removing branches that do not contribute significantly to the model’s accuracy, thereby preventing overfitting.

Criteria for Splitting

Decision Trees use criteria such as:

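- Gini Impurity: Measures the probability that a randomly chosen element would be misclassified if it were labeled at random according to the class distribution in the node.

- Entropy (Information Gain): Entropy measures the disorder within a dataset. Information Gain is the reduction in entropy achieved by a split, and the split with the highest gain is preferred.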

- Variance Reduction: Applied in regression trees, where splits minimize variance within each subset.
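
The three criteria above can be written out in a few lines. This is a sketch in plain Python using the usual textbook definitions; the function names are illustrative.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: -sum(p_k * log2(p_k))."""
    n = len(labels)
    return -sum((count / n) * log2(count / n) for count in Counter(labels).values())


def information_gain(parent, left, right):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - weighted


def variance(values):
    """Population variance, the impurity measure used by regression trees."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


labels = ["yes", "yes", "no", "no"]
print(gini(labels))     # 0.5
print(entropy(labels))  # 1.0
print(information_gain(labels, ["yes", "yes"], ["no", "no"]))  # 1.0
```

A perfectly mixed two-class node has the maximum Gini impurity (0.5) and entropy (1 bit); a split that separates the classes completely recovers the full bit as information gain.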

3. Types of Decision Trees

Classification Trees

These are used for categorical outcomes. Classification Trees predict labels such as “spam” or “not spam” for emails, or “yes” or “no” for binary outcomes.

Regression Trees

Regression Trees predict continuous numerical values. For instance, they can be used to estimate a house’s price based on features like square footage, location, and number of rooms.

CART (Classification and Regression Tree)

The CART algorithm is one of the most widely used tree-building methods. It produces binary trees, handles both classification and regression, and forms the basis for many popular tree-based algorithms.

4. Building a Decision Tree

Step-by-Step Construction Process

- Select the Best Split: Starting from the root, the algorithm identifies the feature and threshold that best split the data, using Gini Impurity or Entropy for classification, or variance reduction for regression.

- Recursively Split the Data: Each new subset is split further based on the best feature, creating a deeper tree.

- Stopping Criteria: The tree stops growing when certain conditions are met, such as a maximum depth or a minimum number of samples per leaf node.

- Pruning: After the initial construction, pruning is applied to simplify the tree, enhancing generalization on new data.
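
The first three steps can be sketched as a short recursive function. This is a simplified, CART-style illustration rather than a production implementation: it uses Gini Impurity, tries every observed value as a threshold, and omits post-pruning. The function names and the toy rows (age, systolic blood pressure) are made up for the example.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels):
    """Return the (feature_index, threshold) with the lowest weighted Gini,
    or None if no split improves on the unsplit node."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build(rows, labels, depth=0, max_depth=3, min_samples=2):
    """Recursively grow a tree; leaves are plain class labels."""
    split = None
    if depth < max_depth and len(labels) >= min_samples:  # stopping criteria
        split = best_split(rows, labels)
    if split is None:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    f, t = split
    left = [(row, y) for row, y in zip(rows, labels) if row[f] <= t]
    right = [(row, y) for row, y in zip(rows, labels) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build([r for r, _ in left], [y for _, y in left],
                      depth + 1, max_depth, min_samples),
        "right": build([r for r, _ in right], [y for _, y in right],
                       depth + 1, max_depth, min_samples),
    }


def predict(tree, row):
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Made-up toy data: [age, systolic blood pressure] -> has condition?
rows = [[25, 110], [32, 118], [47, 135], [51, 142], [62, 150], [38, 121]]
labels = ["no", "no", "yes", "yes", "yes", "no"]

tree = build(rows, labels)
print(tree)  # {'feature': 0, 'threshold': 38, 'left': 'no', 'right': 'yes'}
print(predict(tree, [55, 140]))  # yes
```

On this toy data a single split on age separates the classes, so recursion stops after one level because neither child can be improved further.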

Example of Decision Tree Creation

Consider a dataset of patients with features such as age, blood pressure, and weight to predict a binary outcome: whether they have a specific medical condition. The tree would start at the root with the most influential feature, splitting data based on thresholds that most effectively separate patients into different outcome classes.

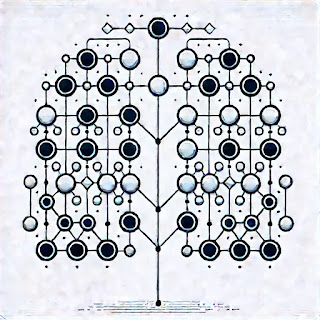

Visual Representation of a Decision Tree

Visually, a Decision Tree is represented as a hierarchical structure. This makes interpretation straightforward, as each decision path corresponds to logical steps based on feature values.

5. Advantages and Limitations of Decision Trees

Advantages

- Interpretability: Decision Trees are highly interpretable, as each split and decision path can be traced to specific features.

- Non-Parametric Nature: They do not assume any underlying data distribution, making them versatile for varied datasets.

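- Handling of Both Types of Variables: In principle, they can process both numerical and categorical data without scaling or normalization, although some implementations (scikit-learn, for example) require categorical features to be numerically encoded first.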

Limitations

- Overfitting: Decision Trees are prone to overfitting, especially with deep trees. Pruning helps mitigate this.

- Instability: Small changes in data can lead to large structural changes in the tree, affecting predictions.

- Bias Toward Dominant Classes: In imbalanced datasets, Decision Trees might favor the majority class. Techniques such as class weighting, resampling, or stratified sampling can reduce this bias.

6. Optimization Techniques for Decision Trees

Pruning Methods

- Pre-Pruning (Early Stopping): Stopping tree growth during construction when a stopping criterion is reached.

- Post-Pruning: Pruning branches after the tree is fully grown, removing those with minimal contribution to overall accuracy.
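
Pre-pruning amounts to stopping conditions such as a maximum depth during construction. Post-pruning can take several forms; the sketch below shows one of them, reduced-error pruning, which replaces a subtree with a leaf whenever doing so does not increase the error on a held-out validation set. The dictionary layout, the `majority` key (the majority training label at that node), and the data are hypothetical.

```python
def predict(tree, row):
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


def prune(tree, rows, labels):
    """Reduced-error pruning, bottom-up, using validation rows and labels."""
    if not isinstance(tree, dict) or not rows:
        return tree  # already a leaf, or no validation data reaches this node
    f, t = tree["feature"], tree["threshold"]
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    # Prune the children first, each with the validation rows that reach it.
    pruned = dict(
        tree,
        left=prune(tree["left"], [r for r, _ in left], [y for _, y in left]),
        right=prune(tree["right"], [r for r, _ in right], [y for _, y in right]),
    )
    subtree_errors = sum(predict(pruned, r) != y for r, y in zip(rows, labels))
    leaf_errors = sum(pruned["majority"] != y for y in labels)
    # Collapse to a leaf if that is at least as accurate on the validation set.
    return pruned["majority"] if leaf_errors <= subtree_errors else pruned


# A hand-written, overgrown tree on one feature; the "no" leaf between
# 50 and 55 stands in for a split that fit noise in the training data.
overgrown = {
    "feature": 0, "threshold": 40, "majority": "no",
    "left": "no",
    "right": {
        "feature": 0, "threshold": 50, "majority": "yes",
        "left": "yes",
        "right": {
            "feature": 0, "threshold": 55, "majority": "yes",
            "left": "no",
            "right": "yes",
        },
    },
}

val_rows = [[30], [45], [52], [53], [60]]
val_labels = ["no", "yes", "yes", "yes", "yes"]

print(prune(overgrown, val_rows, val_labels))
# {'feature': 0, 'threshold': 40, 'majority': 'no', 'left': 'no', 'right': 'yes'}
```

The two deepest decision nodes are collapsed because they do not help on the validation data, while the root split is kept because removing it would add four errors.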

Hyperparameter Tuning

Hyperparameters, such as maximum tree depth, minimum samples per leaf, and minimum samples per split, significantly impact model performance. Tuning these parameters helps balance bias and variance, optimizing model accuracy.

Cross-Validation

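Cross-validation is critical for assessing a tree’s stability and reliability. In k-fold cross-validation, the data is divided into k subsets (folds); the model is trained on k-1 folds and tested on the remaining one, rotating until each fold has served as the test set. Averaging the k scores gives a more reliable performance estimate than a single train/test split.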
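
The fold bookkeeping behind k-fold cross-validation is simple enough to sketch directly. The helper names below are illustrative, and `fit_and_score` is a hypothetical callback that trains a model on the training indices and returns its score on the test indices. In practice the data is usually shuffled first, and libraries provide ready-made versions of this logic.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k contiguous folds.
    Fold sizes differ by at most one sample."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def cross_validate(fit_and_score, n_samples, k):
    """Average the score returned by fit_and_score(train, test) over k folds."""
    scores = [fit_and_score(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


for train, test in k_fold_indices(6, 3):
    print(train, test)
# [2, 3, 4, 5] [0, 1]
# [0, 1, 4, 5] [2, 3]
# [0, 1, 2, 3] [4, 5]
```

For hyperparameter tuning, the same loop would be run once per candidate setting (for example, each maximum depth under consideration), keeping the setting with the best average score.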

Ensemble Methods

Ensemble techniques like Random Forest and Gradient Boosting combine multiple Decision Trees to enhance performance:

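- Random Forest: Builds multiple Decision Trees on bootstrap samples of the data, typically considering a random subset of features at each split, and combines their predictions by averaging (regression) or majority vote (classification).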

- Gradient Boosting: Sequentially builds trees, each correcting the errors of the previous one, improving accuracy but at the expense of increased training time.
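
The idea of training trees on different data subsets and combining their votes can be sketched as follows. To stay short, this example uses one-split trees (stumps) on a single feature and plain bagging; a real Random Forest would also sample features at each split and grow deeper trees. All names and data here are made up for illustration.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a one-split tree on a single numeric feature by minimizing
    the number of misclassified training points."""
    majority = Counter(ys).most_common(1)[0][0]
    best = (sum(y != majority for y in ys), None, majority, majority)
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, threshold, left_label, right_label = best
    return lambda x: left_label if (threshold is None or x <= threshold) else right_label


def fit_bagged(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in range(len(xs))]
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return trees


def predict_vote(trees, x):
    """Combine the individual predictions by majority vote."""
    return Counter(tree(x) for tree in trees).most_common(1)[0][0]


xs = [1, 2, 3, 4, 6, 7, 8, 9]
ys = ["no", "no", "no", "no", "yes", "yes", "yes", "yes"]

trees = fit_bagged(xs, ys)
print(predict_vote(trees, 0), predict_vote(trees, 10))
```

Each stump sees a slightly different sample and may place its threshold differently; the vote smooths out those differences, which is the source of the ensemble's stability.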

7. Evaluating Decision Tree Models

Common Evaluation Metrics

- Accuracy: Percentage of correct predictions over total predictions.

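- Precision and Recall: Precision is the fraction of predicted positives that are truly positive; recall is the fraction of actual positives that the model finds. Both are more informative than accuracy when classes are imbalanced.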

- Mean Squared Error (MSE): Applied to regression trees, measuring the average squared difference between predicted and actual values.

Confusion Matrix for Classification Trees

A Confusion Matrix displays true positives, false positives, true negatives, and false negatives, providing insights into prediction quality and potential model improvements.
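
The metrics above all derive from a handful of counts. The following sketch computes them in plain Python for a binary problem; the function names and the example labels are illustrative.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    return (tp + tn) / (tp + fp + tn + fn)


def precision(tp, fp):
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    return tp / (tp + fn) if (tp + fn) else 0.0


def mean_squared_error(y_true, y_pred):
    """Average squared difference, used for regression trees."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)              # 2 1 4 1
print(accuracy(tp, fp, tn, fn))    # 0.75
print(mean_squared_error([3.0, 5.0], [2.0, 7.0]))  # 2.5
```

Here accuracy is 0.75, while precision and recall are both 2/3, showing how accuracy alone can look better than the model's performance on the positive class.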

ROC and AUC

The Receiver Operating Characteristic (ROC) curve and Area Under the Curve (AUC) metric are helpful for evaluating the trade-off between true positive and false positive rates, especially in classification trees.
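
As a rough illustration, the sketch below computes one point on the ROC curve for a given threshold, and the AUC as the fraction of positive/negative pairs in which the positive example receives the higher score (ties count as half). For a Decision Tree, the score is typically the proportion of positive training samples in the leaf a sample lands in. The scores here are made up.

```python
def tpr_fpr(y_true, scores, threshold):
    """True positive rate and false positive rate when predicting
    positive for every score >= threshold."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    positives = sum(1 for y in y_true if y == 1)
    negatives = len(y_true) - positives
    return tp / positives, fp / negatives


def auc(y_true, scores):
    """AUC via pairwise ranking: the probability that a random positive
    is scored above a random negative."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

print(tpr_fpr(y_true, scores, 0.5))   # all 3 positives found, 1 of 3 negatives flagged
print(tpr_fpr(y_true, scores, 0.75))  # 2 of 3 positives found, no negatives flagged
print(auc(y_true, scores))            # about 0.889 (8 of 9 pairs ranked correctly)
```

Raising the threshold lowers the false positive rate at the cost of missing a positive, which is exactly the trade-off the ROC curve traces out.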

8. Applications of Decision Trees

Healthcare

Decision Trees help in diagnosing diseases, suggesting treatments, and predicting patient outcomes. By analyzing factors such as symptoms, age, and medical history, these trees aid healthcare providers in making informed decisions.

Financial Industry

Decision Trees are instrumental in credit risk analysis, fraud detection, and customer segmentation. Banks and financial institutions rely on these models to evaluate creditworthiness and detect abnormal transaction patterns.

Marketing and Customer Segmentation

Marketers use Decision Trees to classify customers based on demographics, purchasing behavior, and preferences. By predicting customer responses to marketing efforts, Decision Trees enable targeted strategies and personalized outreach.

Manufacturing and Quality Control

Decision Trees in manufacturing can identify factors impacting product quality, helping in early detection of defects and improving overall production efficiency.

9. Advanced Concepts: Random Forests and Gradient Boosting

While Decision Trees are powerful on their own, advanced algorithms build on their foundation to improve accuracy and performance.

Random Forest

Random Forest combines multiple Decision Trees trained on different data subsets, resulting in a more robust model with reduced overfitting. It’s particularly useful for handling high-dimensional data and achieving high accuracy on complex tasks.

Gradient Boosting Machines (GBMs)

GBMs build trees sequentially, where each tree attempts to correct the errors of the previous ones. When well tuned, this often yields very high accuracy, which is why GBMs are common in competitive machine learning tasks. XGBoost and LightGBM are popular implementations of Gradient Boosting.
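
The sequential error-correcting idea can be sketched for regression with squared error, where "correcting the errors" means fitting each new tree to the current residuals. This is a bare-bones illustration with one-split trees and a fixed learning rate, not how XGBoost or LightGBM are implemented; names and data are made up.

```python
def fit_regression_stump(xs, residuals):
    """One-split regression tree: choose the threshold that minimizes the
    sum of squared errors, predicting the mean residual on each side."""
    best = None
    for t in sorted(set(xs))[:-1]:  # keep both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, n_rounds=50, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_regression_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 3.2]

for rounds in (0, 1, 50):
    model = fit_boosted(xs, ys, n_rounds=rounds)
    print(rounds, mse(model, xs, ys))  # training error shrinks as rounds increase
```

The learning rate scales down each tree's contribution, so more rounds are needed, but the model is less likely to chase noise. Training error always falls with more rounds here, which is why validation data is needed to decide when to stop.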

Comparison of Random Forest and Gradient Boosting

- Random Forest: Trees are independent and can be trained in parallel, which keeps training cost comparatively low; robust to overfitting and a good fit for large datasets.

- Gradient Boosting: Trees are built sequentially, so training cost is higher; often more accurate when well tuned, but requires careful tuning to avoid overfitting.

10. Future Directions and Research in Decision Tree Algorithms

Decision Trees and their ensemble methods are continually evolving. Researchers are working on new techniques to improve scalability, computational efficiency, and interpretability.

Interpretability in Complex Models

Despite the rise of black-box models like deep neural networks, there is an increasing emphasis on interpretability, especially in high-stakes industries. Research on Decision Trees focuses on enhancing this transparency without sacrificing predictive power.

AutoML and Decision Trees

Automated Machine Learning (AutoML) frameworks, such as TPOT and H2O AutoML, can search over Decision Tree-based models and their hyperparameters automatically, reducing the need for manual tuning.

Decision Trees stand out as a foundational machine learning algorithm with extensive applications across various industries. Their straightforward, interpretable structure makes them ideal for decision-making scenarios, particularly where transparency is required. Through proper tuning, pruning, and use of ensemble methods like Random Forests and Gradient Boosting, Decision Trees can be optimized to deliver impressive performance and accuracy.

As machine learning advances, Decision Trees remain an essential tool for both beginner and experienced data scientists, offering a clear and efficient way to approach predictive problems. From healthcare to finance, they continue to be among the most versatile algorithms in the field.